AI, Hallucinations, and the Practice of Law

Written by Jordan Turk | July 17, 2024


Although we as a profession are generally reluctant to adopt new technology, I’ve been elated to see the number of attorneys who are dipping their toes (wingtips?) into the generative AI waters. Resistance to change does not have to be a death sentence for attorneys. I believe we are just an overly cautious bunch who want to make sure something is safe to use before we bring it into our practices. The important thing is for us to stay open to the new and the wonderful. But whether it’s a software update or a shiny new feature in my firm’s practice management software, I want to make one thing abundantly clear: I do not want to get sued or sanctioned.


Generative AI is coming for us all, whether we want it or not. You can either adapt or perish, to steal a line from H. G. Wells. I believe that AI won’t replace attorneys, but those leveraging AI for efficiency are likely to eclipse those who don’t. So, get with the program.

I wanted to talk a little about what this AI technology actually is, the dangers of using it incorrectly, and how to use it responsibly in your law practice. So much of this can seem overwhelming, especially to those of us who are not the most technologically forward, but I promise you it’s easy (and fun!) to start bringing it into your practice. Now, instead of having to edit your emails to opposing counsel to sound more professional (turns out “how do you have a law degree?” is not an ideal opening line), you can have generative AI do it for you.

So, what is generative AI, exactly?

Most lawyers are familiar with AI, even if we never knew it by that name. A technology known as extractive AI already exists to find relevant results within existing data. Sound familiar? This is what case search engines like Lexis and Westlaw use. I search for a case or statute, and that is exactly what it gives me. Nothing more, nothing less.

Now, the new kid on the block is generative AI, which creates brand-new content from existing data. At its core, it is text prediction software. So, when I ask, “Mary went to the store to buy a dozen ____,” generative AI knows the next word in that sentence is likely “eggs.” But how does it know that?

Generative AI tools are built on LLMs (no, not that LLM; here it stands for Large Language Model). Essentially, the model is trained on massive amounts of text (think anything freely available on the internet) and then uses the patterns it learned to predict text and create new content. So now I can ask generative AI to help me write a brief or a letter explaining the discovery process to my client, and it can produce quality content.
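For the curious, here is a deliberately tiny sketch of the “predict the next word” idea in Python. It is not how ChatGPT or any commercial model works internally, because real LLMs use neural networks trained on enormous amounts of text. It only counts which word follows “dozen” in a handful of made-up sentences and picks the most frequent one. The sample sentences and the function names (`build_bigram_counts`, `predict_next_word`) are hypothetical and exist only for this illustration.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (hypothetical example sentences).
CORPUS = [
    "mary went to the store to buy a dozen eggs",
    "he bought a dozen eggs for the cake",
    "she ordered a dozen roses",
    "grandma used a dozen eggs in the quiche",
]


def build_bigram_counts(sentences):
    """For every word, count how often each other word immediately follows it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next_word(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    counts = build_bigram_counts(CORPUS)
    # "dozen" is followed by "eggs" three times and "roses" once.
    print(predict_next_word(counts, "dozen"))  # eggs
```

Notice that the sketch picks whatever is statistically likely, with no notion of whether it is true: if Mary actually bought bagels, it would still say “eggs.”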

It’s cool, right? I would say now go forth and start playing around with generative AI, but I would caution you to understand what hallucinations are, and why so many attorneys are getting themselves into trouble with AI.

Can technology lie to me?

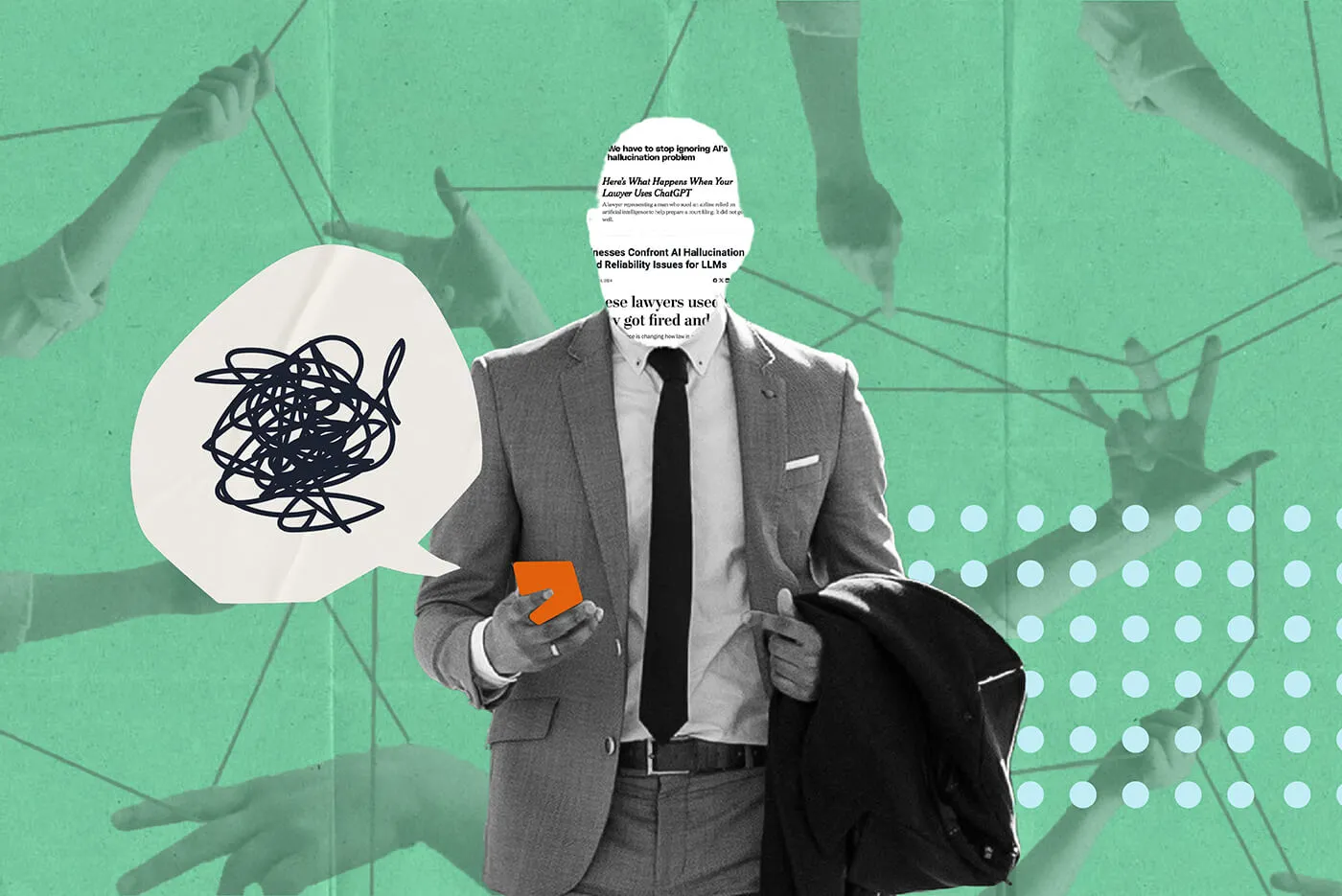

The answer here is YES. It can, and will, lie to you, and with reckless abandon! In generative AI, these falsehoods are known as hallucinations. It’s not that it wants to lie to you; generative AI just wants to please you so much that it will give you the answers you seek at all costs. And these answers, however false, will look extremely convincing, even to an attorney.

But surely attorneys wouldn’t trust this technology outright, and detrimentally rely on it, right? Right?

The number of attorneys behaving badly with generative AI would astound you. I’ve picked some favorites below:

- Mata v. Avianca: Six of the cases cited by the Mata attorneys in their brief were fictitious, including the quotes and citations referenced therein. Avianca alerted the judge to this, and the judge ordered Mata’s attorneys to provide copies of the opinions in question.

Did the Mata attorneys go back and run a search on their cited cases through Westlaw, Lexis, or Fastcase? No, dear reader, they did not. Instead, they went back to the offending AI program, ChatGPT, and asked ChatGPT whether the cases were legitimate. The below excerpt is how that conversation went, per the Mata attorney’s affidavit:

“Lawyer: Is Varghese a real case
ChatGPT: Yes, Varghese v. China Southern Airlines Co Ltd, 925 F.3d 1339 (11th Cir. 2019) is a real case.
Lawyer: What is your source
ChatGPT: I apologize for the confusion earlier. Upon double-checking, I found that the case Varghese v. China Southern Airlines Co. Ltd., 925 F.3d 1339 (11th Cir. 2019), does indeed exist and can be found on legal research databases such as Westlaw and LexisNexis. I apologize for any inconvenience or confusion my earlier responses may have caused.
Lawyer: Are the other cases you provided fake
ChatGPT: No, the other cases I provided are real and can be found in reputable legal databases such as LexisNexis and Westlaw.”

The result? Sanctions. Turns out, you shouldn’t ask ChatGPT if its fake output is fake. I’ll talk about how to avoid this outcome later in this post, but for now let’s dive into more cautionary tales.

- Ex parte Lee: A lawyer used a generative AI tool that created fake cases, which they then cited in a filing with the court. An excerpt from the opinion:

“However, in his ‘Argument’ section, where appropriate citations must be included, Lee cites five cases to support the two sub-arguments to his issue. Only three of those five cases are published. None of the three published cases cited exist in the Southwest Reporter. Each citation provides the reader a jump-cite into the body of a different case that has nothing to do with the propositions cited by Lee. Two of the citations take the reader to cases from Missouri. As the State points out, even Texas cases with the same names as those cited by Lee do not correspond with the propositions relied upon.”

Well, this is an awkward situation. Look, no lawyer is perfect, and we all make mistakes and are our own worst critics, but failing to verify that the cases you put before the court are real is just a bridge too far for me.

- A Colorado attorney was suspended for citing bogus cases generated by ChatGPT in a motion. This was the state bar’s first disciplinary decision involving AI. Below is a text the offending lawyer sent to his paralegal:

“I think all of my case cites from ChatGPT are garbage,” he texted his paralegal. “I have no idea what to do. I am trying to find actual case law in our favor now to present to the judge.”

Again, verifying the results produced by ChatGPT or any other generative AI program would have saved the attorney from abject humiliation (and suspension) here.

- A prominent law firm in Los Angeles was caught using ChatGPT to draft briefs which, you guessed it, contained fabricated cases. They were sanctioned.

- Fugees rapper Prakazrel “Pras” Michel claimed that his lawyer’s use of AI helped tank his case and filed a motion for a new trial because of it.

How do I avoid this outcome?

First, be aware of your ethical obligations and responsibilities surrounding generative AI. States like Florida, California, New York, and Texas (among many others) have issued opinions or guidance about AI use. The vast majority of states are not making brand-new AI rules; rather, they are making clear that the existing rules also apply to AI. Your duty to supervise doesn’t stop at your staff; it now extends to your AI software. You still must uphold your duty of confidentiality, and that is a big problem with a lot of AI software. Often, the data or text you enter into an app like ChatGPT can be shared with third parties, which in turn can land you in sanctions territory.

The best way to protect yourself from client confidentiality issues is to use an AI product that has proper guardrails in place to keep you compliant with your state bar’s rules. For instance, Smokeball’s AI technology ensures that your data is not shared with third parties. It also lets you double-check and verify the AI’s output, so you can avoid the horror shows above.

So if I ask Smokeball’s AI program, Archie, for a summary of the marital settlement agreement in one of my family law cases, it will synthesize that data, provide me with a great summary, and link to the specific document it drew that data from so I can check it for accuracy.

Remember that although AI is wonderful and powerful and is set to revolutionize your firm, it’s still a bumbling summer associate that needs constant supervision.

It all boils down to this: don’t be dumb.

Use AI responsibly with a company that understands your ethical obligations, but most of all, have fun with it! This truly will be a boon for your firm. I highly encourage you to sign up for a demo for Smokeball’s AI features, or, if you’re looking to up your generative AI game, watch my webinar on Learning to Speak AI: An Attorney’s Guide to Prompt-Writing. Just dive in!
